Claude Code

Across LLM communities, there’s little argument that Anthropic’s Claude models are the kings of coding. The company has taken a distinctive stance by making its models more specialized: they target software engineers more, and the broader demographic that, say, ChatGPT targets a little less. This is evident in public benchmarks, in private benchmarks, and in my own personal usage.

Anthropic’s latest tool for software developers is Claude Code, which I personally use through the Claude Code for VS Code extension. These tools provide the familiar chat interface most people are used to, backed by powerful integrations with Anthropic’s Claude models. Claude Code can merge your messages with context from your codebase, and it can also enrich its responses with web searches.

Powerful as it is, Claude Code requires one of Anthropic’s paid subscriptions. At the time of writing, those tiers are:

- Pro $20/month

- Max 5x $100/month

- Max 20x $200/month

The more expensive the tier, the higher your usage limits.

If you’re not careful, you can quickly eat up your allotted usage. I recommend reading Anthropic’s Claude Code Best Practices in full. The two biggest pieces of advice there are to use the /clear command to clear context, and to leverage CLAUDE.md files. The former both reduces the number of tokens you burn through and keeps the model focused on the task at hand, while the latter lets you write configurations at various levels.

I’ve found that I like to maintain a CLAUDE.md file in each project, detailing project-specific context, preferences, and asks, alongside a CLAUDE.md file in my home directory that holds preferences that transcend projects. For example, the project file might state that I like my Python code to be type hinted, while the home file might say that we should write unit tests for any new functions we add, and confirm that those tests fail before we write the source code that makes them pass.
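Here is a minimal sketch of scripting this two-level setup. The function names `write_home_claude_md` and `write_project_claude_md` are hypothetical. The file contents simply restate the two example preferences above. Both functions leave an existing CLAUDE.md untouched rather than overwrite it.

```bash
# Hypothetical helpers for a two-level CLAUDE.md layout.
# The preference text is illustrative; edit it to match your own.

# Home-level file: preferences that transcend projects.
write_home_claude_md() (
  target="${HOME}/CLAUDE.md"
  # Never overwrite an existing file; only create it when missing.
  if [ ! -e "$target" ]; then
    cat > "$target" <<'EOF'
# Preferences for all projects

- Write unit tests for any new functions we add.
- Make sure each new test fails before writing the source code that makes it pass.
EOF
  fi
)

# Project-level file: context that only applies to one repository.
write_project_claude_md() (
  target="${1:?usage: write_project_claude_md PROJECT_DIR}/CLAUDE.md"
  if [ ! -e "$target" ]; then
    cat > "$target" <<'EOF'
# Project context

- This is a Python project; type hint all Python code.
EOF
  fi
)
```

For example, run `write_home_claude_md` once. Then run `write_project_claude_md ~/code/my-project` (a placeholder path) for each repository.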

Integrating Claude Code and Google’s Gemini

While Claude remains king, it is expensive. Google recently released its Gemini CLI tool, which I use by generating an API key here and saving it to a credentials.json file. At the time of writing, Google offers a very generous free tier. I’ve been able to get the two working together by letting Claude Code invoke Gemini, which offsets some of Claude’s cost while still leveraging its high performance.

To achieve this, I included instructions in my ~/CLAUDE.md telling Claude to use the Gemini CLI. Something as simple as this has been effective:

## Core Philosophy: Gemini CLI Orchestration

**CRITICAL: Claude Code should use the Gemini CLI to do as much of the thinking and processing as possible. Claude Code should only act as the orchestrator, using as few tokens itself as possible.**

To achieve this, Claude Code should invoke Gemini like this:

```bash
gemini --prompt "ABC"
```

This approach ensures:

- Minimal token usage by Claude Code

- Efficient processing through Gemini CLI

- Claude Code focuses on orchestration rather than heavy computation
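Because I edit this file by hand, it is easy to paste the section in twice. The sketch below uses a hypothetical `add_gemini_section` function. It appends a condensed version of the section only when the section's heading line is not already in the file. This assumes the heading appears nowhere else in the file.

```bash
# Hypothetical helper: append the orchestration instructions to a CLAUDE.md
# file (default: ~/CLAUDE.md) only if they are not already present.
add_gemini_section() (
  target="${1:-$HOME/CLAUDE.md}"
  marker="## Core Philosophy: Gemini CLI Orchestration"
  # Create the file if it does not exist yet so grep can read it.
  touch "$target"
  # -x: whole-line match, -F: treat the marker as a fixed string.
  if grep -qxF "$marker" "$target"; then
    echo "Section already present in $target"
  else
    {
      printf '\n%s\n\n' "$marker"
      cat <<'EOF'
**CRITICAL: Claude Code should use the Gemini CLI to do as much of the thinking and processing as possible. Claude Code should only act as the orchestrator, using as few tokens itself as possible.**

To achieve this, Claude Code should invoke Gemini non-interactively with `gemini --prompt "ABC"`.
EOF
    } >> "$target"
    echo "Appended section to $target"
  fi
)
```

Running it a second time only prints a message and leaves the file as it was.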

There are many discussions of this approach online. For example, see this Reddit post, whose author shares a much more detailed CLAUDE.md file:

# Using Gemini CLI for Large Codebase Analysis

When analyzing large codebases or multiple files that might exceed context limits, use the Gemini CLI with its massive context window. Use `gemini -p` to leverage Google Gemini's large context capacity.

## File and Directory Inclusion Syntax

Use the `@` syntax to include files and directories in your Gemini prompts. The paths should be relative to WHERE you run the gemini command:

### Examples:

**Single file analysis:**

```bash
gemini -p "@src/main.py Explain this file's purpose and structure"
```

**Multiple files:**

```bash
gemini -p "@package.json @src/index.js Analyze the dependencies used in the code"
```

**Entire directory:**

```bash
gemini -p "@src/ Summarize the architecture of this codebase"
```

**Multiple directories:**

```bash
gemini -p "@src/ @tests/ Analyze test coverage for the source code"
```

**Current directory and subdirectories:**

```bash
gemini -p "@./ Give me an overview of this entire project"

# Or use --all_files flag:
gemini --all_files -p "Analyze the project structure and dependencies"
```

## Implementation Verification Examples

**Check if a feature is implemented:**

```bash
gemini -p "@src/ @lib/ Has dark mode been implemented in this codebase? Show me the relevant files and functions"
```

**Verify authentication implementation:**

```bash
gemini -p "@src/ @middleware/ Is JWT authentication implemented? List all auth-related endpoints and middleware"
```

**Check for specific patterns:**

```bash
gemini -p "@src/ Are there any React hooks that handle WebSocket connections? List them with file paths"
```

**Verify error handling:**

```bash
gemini -p "@src/ @api/ Is proper error handling implemented for all API endpoints? Show examples of try-catch blocks"
```

**Check for rate limiting:**

```bash
gemini -p "@backend/ @middleware/ Is rate limiting implemented for the API? Show the implementation details"
```

**Verify caching strategy:**

```bash
gemini -p "@src/ @lib/ @services/ Is Redis caching implemented? List all cache-related functions and their usage"
```

**Check for specific security measures:**

```bash
gemini -p "@src/ @api/ Are SQL injection protections implemented? Show how user inputs are sanitized"
```

**Verify test coverage for features:**

```bash
gemini -p "@src/payment/ @tests/ Is the payment processing module fully tested? List all test cases"
```

## When to Use Gemini CLI

Use `gemini -p` when:

- Analyzing entire codebases or large directories

- Comparing multiple large files

- Needing to understand project-wide patterns or architecture

- The current context window is insufficient for the task

- Working with files totaling more than 100KB

- Verifying if specific features, patterns, or security measures are implemented

- Checking for the presence of certain coding patterns across the entire codebase

## Important Notes

- Paths in `@` syntax are relative to your current working directory when invoking `gemini`

- The CLI will include file contents directly in the context

- No need for `--yolo` flag for read-only analysis

- Gemini's context window can handle entire codebases that would overflow Claude's context

- When checking implementations, be specific about what you're looking for to get accurate results
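That file gives two rules of thumb: delegate once inputs pass roughly 100KB, and resolve `@` paths relative to the current directory. Both can be checked before handing anything to Gemini. This is my own sketch, not part of the Reddit author’s file. The names `total_bytes`, `over_threshold`, `build_gemini_prompt` and the `THRESHOLD_BYTES` variable are hypothetical. The 102400-byte default is an assumption about what “100KB” means. The size count skips `.git` but otherwise counts every file, so treat it as a rough estimate.

```bash
# Hypothetical helpers (my own sketch, not part of the CLAUDE.md file above).

# Print the total size in bytes of every regular file under the given paths,
# skipping anything named .git. This is only a rough estimate of prompt size.
total_bytes() {
  find "$@" -name .git -prune -o -type f -exec cat {} + 2>/dev/null | wc -c | tr -d ' '
}

# Succeed when the files under the given paths exceed THRESHOLD_BYTES
# (assumed default: 102400 bytes, i.e. 100 KiB).
over_threshold() (
  limit="${THRESHOLD_BYTES:-102400}"
  [ "$(total_bytes "$@")" -gt "$limit" ]
)

# Build an "@path ... question" prompt. Fail early if any path does not
# exist relative to the current directory, which is where @ paths resolve.
build_gemini_prompt() (
  question="$1"
  shift
  prompt=""
  for p in "$@"; do
    if [ ! -e "$p" ]; then
      echo "error: '$p' not found relative to $(pwd)" >&2
      exit 1
    fi
    prompt="${prompt}@${p} "
  done
  printf '%s%s\n' "$prompt" "$question"
)
```

For example, the following calls Gemini only when both paths exist and together exceed the threshold:

`prompt=$(build_gemini_prompt "Analyze test coverage for the source code" src/ tests/) && over_threshold src/ tests/ && gemini -p "$prompt"`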

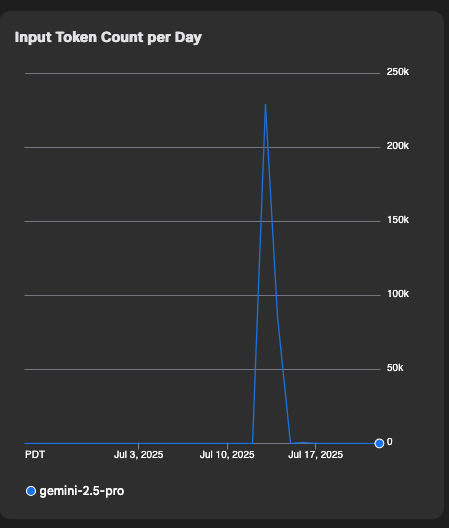

In preparation for this blog post, I was trying out my first “vibe code” session, where I had Claude Code and Gemini write me an entire project’s repository from scratch. Here’s an example of the token usage that this exercise incurred:

As with all AI tools, be aware of the data and training policies of the tools you are using.

Next, I’m interested in experimenting with the Zen MCP server, which offers preconfigured actions you can ask it to perform, along with an array of LLMs to use.

Overall, I believe that blending models, each used for the specific tasks it excels at, will become standard practice. This is similar to the mixture-of-experts (MoE) design that some models employ internally.