If you haven’t already, give the previous article in this series a read, as it walks you through how to self-host LLMs by running Ollama. That article leaves you in a situation where you can only interact with a self-hosted LLM via the command line, but what if we wanted to use a prettier web UI? That’s where Open WebUI (formerly Ollama WebUI) comes in.

I’m partial to running software in a Dockerized environment, specifically with Docker Compose. For that reason, I typically run Ollama WebUI with this docker-compose.yaml file:

    version: '3.8'

    services:
      # From previous blog post. Here for your convenience ;)
      ollama:
        image: ollama/ollama:latest
        container_name: ollama
        ports:
          - 11434:11434
        volumes:
          - /home/ghilston/config/ollama:/root/.ollama
        tty: true
        # If you have an Nvidia GPU, define this section, otherwise remove it to use
        # your CPU
        deploy:
          resources:
            reservations:
              devices:
                - driver: nvidia
                  count: 1
                  capabilities: [gpu]
        restart: unless-stopped

      ollama-webui:
        image: ghcr.io/ollama-webui/ollama-webui:main
        container_name: ollama-webui
        volumes:
          - /home/ghilston/config/ollama/ollama-webui:/app/backend/data
        depends_on:
          - ollama
        ports:
          - 8062:8080
        environment:
          - '/ollama/api=http://ollama:11434/api'
        extra_hosts:
          - host.docker.internal:host-gateway
        restart: unless-stopped

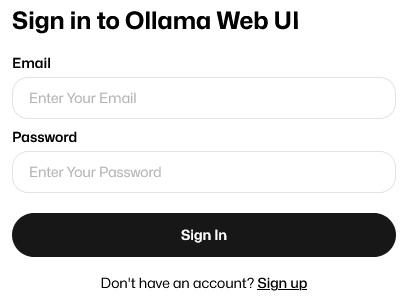

Then I can start Ollama WebUI by running $ docker compose up, which will download the images from the remote repository and start the containers. At this point we can interact with the application in a web browser by navigating to http://localhost:8062, which is the host port mapped to the web UI in the compose file (port 11434 is the Ollama API, not the UI). In my case I replace localhost with the IP address of my home server. This will bring up the web UI, which should look something like this:

Click the Sign Up button, create an account for yourself, and log in. Alternatively, disable the need to create accounts by adding another environment variable, WEBUI_AUTH=False, to the environment list of the ollama-webui service.
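If the page does not load at all, it can help to check which of the two containers is actually reachable. Here is a minimal sketch of such a check, assuming the host ports from the compose file (8062 for the web UI, 11434 for the Ollama API). The function names `service_urls`, `is_up`, and `report` are my own for illustration and not part of either project.

```python
import urllib.error
import urllib.request

# Host ports as published in the compose file; adjust if you changed them.
WEBUI_PORT = 8062    # "8062:8080" on the ollama-webui service
OLLAMA_PORT = 11434  # "11434:11434" on the ollama service


def service_urls(host: str = "localhost") -> dict[str, str]:
    """Return the base URL of each service as seen from outside Docker."""
    return {
        "webui": f"http://{host}:{WEBUI_PORT}",
        "ollama": f"http://{host}:{OLLAMA_PORT}",
    }


def is_up(url: str, timeout: float = 3.0) -> bool:
    """Return True if the URL answers an HTTP GET with a 2xx or 3xx status."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return 200 <= resp.status < 400
    except (urllib.error.URLError, OSError):
        # Connection refused, timeout, DNS failure, or an HTTP error status.
        return False


def report(host: str = "localhost") -> None:
    """Print one line per service saying whether it is reachable."""
    for name, url in service_urls(host).items():
        state = "up" if is_up(url) else "DOWN"
        print(f"{name:7s} {url} {state}")
```

Calling `report()` on the Docker host, or `report("<your server IP>")` from another machine, prints one status line per service. If ollama is up but webui is not, the problem is most likely in the ollama-webui container rather than in Ollama itself.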

Then you’ll have access to the application and it should look like this:

At this point we need to go to the top of the web UI, click on the dropdown labeled Select a model, and type in the name of a model we wish to download and use. As a blanket small model recommendation, I’d suggest trying out mistral:7b. This will download the 3.8GB model and make it available. Once it’s downloaded, select the model from the dropdown. At this point, you’re ready to interact with the model.
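The same download can also be scripted against Ollama's HTTP API instead of clicking through the dropdown. The sketch below is an illustration under a few assumptions: Ollama is reachable at http://localhost:11434, the `/api/pull` endpoint accepts the model under the `name` field (newer API docs call this field `model`, so check the docs for your version), and `/api/tags` lists the locally available models. The helper names are hypothetical.

```python
import json
import urllib.request

# Assumption: Ollama's port is published on this machine, as in the compose file.
OLLAMA_URL = "http://localhost:11434"


def build_pull_request(model: str, base_url: str = OLLAMA_URL) -> urllib.request.Request:
    """Build a POST to /api/pull asking Ollama to download `model`."""
    # "stream": False asks for a single JSON reply instead of progress updates.
    body = json.dumps({"name": model, "stream": False}).encode("utf-8")
    return urllib.request.Request(
        f"{base_url}/api/pull",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def model_names(tags_response: dict) -> list[str]:
    """Extract the model names from the JSON returned by GET /api/tags."""
    return [m["name"] for m in tags_response.get("models", [])]


def pull_and_list(model: str = "mistral:7b", base_url: str = OLLAMA_URL) -> list[str]:
    """Download `model`, then return the names of all local models."""
    # A multi-gigabyte pull can take minutes, so no timeout is set here.
    with urllib.request.urlopen(build_pull_request(model, base_url)) as resp:
        resp.read()
    with urllib.request.urlopen(f"{base_url}/api/tags") as resp:
        return model_names(json.load(resp))
```

Running `pull_and_list()` should return a list that includes mistral:7b once the download finishes, and the model should then also show up in the web UI's dropdown since both talk to the same Ollama instance.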

As we did in the previous blog post, let’s type in this prompt:

Write me a Haiku about why large language models are so cool

and you’ll get a response similar to this:

Model’s vast domain,

Data’s intricacies explored,

AI’s wisdom grows.
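The web UI is ultimately sending prompts like this one to Ollama on your behalf. As a rough sketch of what that looks like without the UI, here is the same prompt sent directly to Ollama's `/api/generate` endpoint. It assumes Ollama is reachable at http://localhost:11434 and that mistral:7b has already been downloaded. The function names are my own, and the web UI's actual internals may differ.

```python
import json
import urllib.request

# Assumption: Ollama's port is published on this machine, as in the compose file.
OLLAMA_URL = "http://localhost:11434"


def build_generate_request(
    prompt: str, model: str = "mistral:7b", base_url: str = OLLAMA_URL
) -> urllib.request.Request:
    """Build a POST to /api/generate for a single, non-streamed completion."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        f"{base_url}/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt: str, model: str = "mistral:7b", base_url: str = OLLAMA_URL) -> str:
    """Send the prompt to Ollama and return the generated text."""
    req = build_generate_request(prompt, model, base_url)
    with urllib.request.urlopen(req) as resp:
        # With streaming disabled, the reply is one JSON object whose
        # "response" field holds the full completion.
        return json.load(resp)["response"]
```

Calling `generate("Write me a Haiku about why large language models are so cool")` returns the haiku as a plain string. The wording will differ from run to run, just as it does in the web UI.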

At this point you’re ready to interact with your locally running LLM using a pretty web UI!