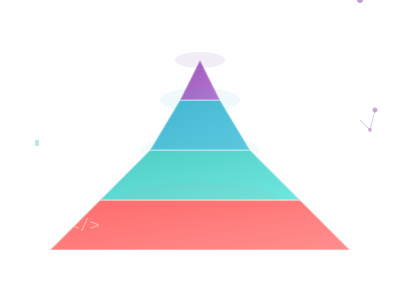

I’ve been experimenting with integrating LLMs into software development for quite some time now. Having played with countless libraries, frameworks, and tools, I’ve identified what I believe are the key tiers of integration individuals will experience today.

Level 1: “Copy + Paste” - Human driven with AI Consultation

I believe the first situation developers will experience is simply using a web-based chat LLM interface like ChatGPT, Claude, Gemini, DeepSeek, or even the self-hosted Open WebUI. Regardless of the chosen tool, the interaction is the same. You type up your request and manually copy and paste any relevant content from your codebase. The model replies, and you copy and paste any relevant portions of the response back into your codebase.

At first, this will seem like magic, and it is, especially when you recall that famous Arthur C. Clarke quote:

“Any sufficiently advanced technology is indistinguishable from magic.”

With so much copying and pasting, those who like to optimize their workflow will soon be longing for a better solution.

Level 2: “MCP” - Human driven with AI Consultation

The next level is a minor change with a pretty big impact. It’s switching from plain chat to a chat that is plugged into function and tool calling. I personally tend to do this through what are called Model Context Protocol servers. I’ve written more about that topic in my “Model Context Protocol (MCP): The Future of LLM Function Calling” blog post.

Essentially, an MCP server acts as a bridge that lets an LLM call traditional code and use the results as part of its response. This is incredibly powerful, as it gives you a natural language interface to traditional code. Some useful MCP servers provide file access, current and forecasted weather, integration with remote REST APIs or databases, etc. The only limitation is your imagination and ability to write code.
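To make the "bridge" idea concrete, here is a minimal sketch in plain Python. It is not the MCP protocol or either official SDK; the TOOLS registry, the tool decorator, and handle_tool_call are hypothetical names I made up purely to show the shape of the interaction: the model emits a structured request, ordinary code runs, and the result goes back to the model.

```python
import json
from typing import Any, Callable

# Hypothetical registry mapping tool names to ordinary Python functions.
TOOLS: dict[str, Callable[..., Any]] = {}


def tool(func: Callable[..., Any]) -> Callable[..., Any]:
    """Register a plain function so a model can request it by name."""
    TOOLS[func.__name__] = func
    return func


@tool
def add(a: float, b: float) -> float:
    """Traditional code that the model can call instead of guessing."""
    return a + b


def handle_tool_call(request_json: str) -> str:
    """Run the requested tool and return a JSON result for the model."""
    request = json.loads(request_json)
    name = request["name"]
    if name not in TOOLS:
        return json.dumps({"error": f"unknown tool: {name}"})
    result = TOOLS[name](**request.get("arguments", {}))
    return json.dumps({"result": result})


# A model would emit a request like this instead of answering directly.
print(handle_tool_call('{"name": "add", "arguments": {"a": 2, "b": 3}}'))
```

A real MCP server adds tool discovery, schemas, and a transport on top of this, but the core loop is the same: name in, arguments in, result out.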

I’ve had great success using the official modelcontextprotocol/python-sdk and modelcontextprotocol/typescript-sdk libraries to write my own. I’ve typically connected my chat to MCP servers by using the Claude desktop app.

Essentially, your workflow stays mostly the same. You are still chatting back and forth, copying and pasting into the request and out of the response. However, depending on which MCP servers you set up, the LLM may be able to gather some of the context itself. For example, with the filesystem MCP server integrated, the LLM can search, read, and write files, and more. This means less back and forth to build up the model’s context of the situation.
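As a rough illustration of what search, read, and write tools involve, here is a small sketch of file helpers confined to one directory. This is not the actual filesystem MCP server; the FileTools class and its method names are assumptions of mine, and the root-directory check is just one plausible way to keep a model from wandering outside your project.

```python
from pathlib import Path


class FileTools:
    """Hypothetical file tools confined to a single root directory."""

    def __init__(self, root: str) -> None:
        self.root = Path(root).resolve()

    def _safe(self, relative: str) -> Path:
        # Reject paths that escape the root (e.g. "../secrets.txt").
        path = (self.root / relative).resolve()
        if path != self.root and self.root not in path.parents:
            raise PermissionError(f"{relative} is outside {self.root}")
        return path

    def search(self, pattern: str) -> list[str]:
        """Return root-relative paths of files matching a glob pattern."""
        return sorted(
            p.relative_to(self.root).as_posix()
            for p in self.root.rglob(pattern)
            if p.is_file()
        )

    def read(self, relative: str) -> str:
        """Return the text of a file inside the root."""
        return self._safe(relative).read_text(encoding="utf-8")

    def write(self, relative: str, content: str) -> None:
        """Create or overwrite a file inside the root."""
        path = self._safe(relative)
        path.parent.mkdir(parents=True, exist_ok=True)
        path.write_text(content, encoding="utf-8")
```

Each method could then be exposed to the model as a tool, so that it can call something like search("*.py") itself instead of waiting for you to paste files in.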

In the future, I plan to try out Open WebUI’s support for MCP servers, which did not exist at the time I was experimenting with this level of LLM usage.

Level 3: “Code/Editor/IDE Integration” - Human driven with AI Assistance

The next level is using some sort of AI assistance that is plugged into your codebase, editor, or IDE. Examples include the Cursor editor, the Windsurf editor, the Cline extension, the Roo Code extension, and GitHub Copilot. The main benefit of these tools is their integration with your code’s directory and your editor.

It’s important to note that most of these tools also integrate with MCP servers.

While the main interface to the LLM will still be chat-based, you’ll also find that many of these tools offer auto-generated code suggestions as you type. I often do not use this autocomplete feature, as I find that it lacks additional context on how I want to implement each piece of code.

Let’s walk through an example. I often write my function docstrings first, so I might write:

    def calculate_compound_interest(principal: float, rate: float, time: int, n: int = 1) -> float:
        """
        Calculate compound interest using the formula A = P(1 + r/n)^(nt)

        Args:
            principal: Initial amount of money
            rate: Annual interest rate (as decimal, e.g., 0.05 for 5%)
            time: Number of years
            n: Number of times interest is compounded per year

        Returns:
            Final amount after compound interest
        """

and my tool of choice might auto-generate:

        return principal * (1 + rate/n) ** (n * time)
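Putting the signature and the suggested body together gives a runnable function. The sketch below shortens the docstring and adds one thing that is not in the original snippet: a guard against a non-positive n, which I added because n sits in a denominator.

```python
def calculate_compound_interest(
    principal: float, rate: float, time: int, n: int = 1
) -> float:
    """Return the final amount A = P(1 + r/n)^(nt)."""
    if n <= 0:
        # Added guard (not in the original snippet): avoids division by zero.
        raise ValueError("n must be a positive number of compounding periods")
    return principal * (1 + rate / n) ** (n * time)


# $1,000 at 5% for 10 years, compounded monthly.
amount = calculate_compound_interest(1000, 0.05, 10, n=12)
print(f"{amount:.2f}")
```

With monthly compounding, this works out to roughly 1647.01, compared with about 1628.89 for the default of annual compounding.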

The main benefit I find at this level of LLM integration is the tool’s ability to automatically read additional context from your codebase. If we were importing other classes, files, etc., the LLM would be able to go out and read those files, with or without asking for your permission first, depending on your settings.

Roo Code Example

Let’s walk through that example using one of my favorite tools, the Roo Code extension.

First off, I am no longer copying and pasting files into the chat window. Roo Code will search your files and read what’s appropriate. You have granular control over what it is allowed to do automatically; for anything else, it will pause and ask for approval for each action. For example, I often enable READ ALL. That would look like this:

If you have specific context in mind that you’d like to bring to the model’s attention, there is a convenient “Add to context” button you can click to automatically send your highlighted code to the chat window. It will look something like this:

This will add the file path, line numbers, and code to the chat:

Once you submit your message, the LLM will propose changes, which would look like this:

You’re able to accept them as is, modify them right then and there, or fire back another message adding in more context or requested changes.

I cannot overstate how much more convenient this iteration loop is compared to Level 1, and even Level 2.

Level 4: “Human In The Loop” - AI Driven with Human Oversight

This level of LLM tooling is one I’m still experimenting with. Here the LLM handles many more of the iterations itself, and the line between this level and Level 3 is a bit blurred. I mostly made this level to be able to specifically state how incredibly powerful Claude Code is.

Claude Code is the tooling that Anthropic has written around their Claude LLMs. While all of the previous levels I’ve mentioned have used Claude under the hood, this is the first level that really integrates with Claude, giving you as much “AI powered” coding as possible. This is where “vibe coding” can really be tried out.

Claude Code provides a terminal-based integration, but I’ve personally really been enjoying the Claude Code for VSCode extension, which can more conveniently show you the changes it’s making.

Essentially, your workflow is the same as Level 3. You are still in the loop to approve things, and you can have things auto-approved if you want. There’s even a way to run it with “auto approve everything”: running $ claude --dangerously-skip-permissions bypasses all permission checks. I have not used this, nor would I without, say, a throwaway container that has only my source code mounted, for example by using this devcontainer approach.
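The approval loop described above can be sketched in a few lines. This is only my mental model, not how Claude Code or Roo Code implement it; ApprovalPolicy, run_action, and the "read"/"write" action kinds are hypothetical names chosen for illustration.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class ApprovalPolicy:
    """Hypothetical policy: which action kinds may run without asking."""

    auto_approved: set[str] = field(default_factory=set)
    approve_everything: bool = False  # analogous to skipping all checks

    def allows(self, kind: str) -> bool:
        return self.approve_everything or kind in self.auto_approved


def run_action(
    kind: str,
    action: Callable[[], str],
    policy: ApprovalPolicy,
    ask_human: Callable[[str], bool],
) -> str:
    """Run the action if the policy or the human approves it."""
    if policy.allows(kind) or ask_human(kind):
        return action()
    return f"{kind} denied"


policy = ApprovalPolicy(auto_approved={"read"})
deny_all = lambda kind: False  # stand-in for a human who rejects every prompt

print(run_action("read", lambda: "file contents", policy, deny_all))
print(run_action("write", lambda: "file written", policy, deny_all))
```

In this sketch, reads go through without a prompt while writes still depend on the human. Setting approve_everything=True removes the human from the loop entirely, which is why I would only do the equivalent inside a disposable container.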

Fortunately, Claude Code is available with small usage limits via Anthropic’s Pro plan, which is ~$20/month. Their more expensive plans increase the limits.

As the tooling improves, I believe we’ll be relying less and less on websites like Stack Overflow, and other Q&A style searching. While there is no replacement for reading actual documentation, I believe the interactive process of working with LLMs to develop, test, and document software is here to stay.